Let’s face it. Web scraping can be a bit boring sometimes.

The general process is:

- Find a site that has the data

- Use Inspect Element to see where the data lives

- If it’s in an HTML table, write logic to parse the table

- Run it on one page

- Run it on all pages

- Fix minor bugs

- Done
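If you script those steps, the "one page, then all pages, then fix bugs" loop might look roughly like this. It is a minimal sketch under stated assumptions: `scrape_pages`, `fetch` and `parse` are hypothetical names, and the actual fetching and parsing functions are left for you to supply.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, str]


def scrape_pages(
    urls: List[str],
    fetch: Callable[[str], str],
    parse: Callable[[str], List[Row]],
) -> Tuple[List[Row], Dict[str, str]]:
    """Fetch and parse every URL, collecting rows plus a per-page error log.

    `fetch` and `parse` are hypothetical callables you supply (for example a
    Selenium page load and a table parser). A failure on one page is
    recorded instead of aborting the run, so the "fix minor bugs" step
    starts from a list of exactly which pages broke and why.
    """
    rows: List[Row] = []
    errors: Dict[str, str] = {}
    for url in urls:
        try:
            rows.extend(parse(fetch(url)))
        except Exception as exc:  # deliberately broad: log every failure, keep going
            errors[url] = f"{type(exc).__name__}: {exc}"
    return rows, errors
```

Calling it with `urls[:1]` is the one-page test run; passing the full list covers the rest, and whatever ends up in `errors` is your bug list.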

Each and every site has its own little quirks. Some people use `<table>` tags wrong and put the column headers in an ordinary `<tr><td>` row instead of using a `<thead>`. Others are still living pre-2000, with their entire site laid out as one big table. And sometimes a site updates, so you have to make yet more minor fixes.
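For the `<thead>` quirk in particular, one hedged approach is to prefer a real `<thead>` when the site provides one and otherwise assume the first row carries the column names. This sketch uses only Python’s standard-library `html.parser`; `TableParser` and `parse_table` are hypothetical names, and it assumes a single, non-nested table with explicitly closed tags.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, row by row.

    Assumes a single, non-nested table whose <td>, <th> and <tr> tags are
    explicitly closed; real-world HTML often needs more forgiving handling.
    """

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[List[str]] = []
        self.thead_rows: List[int] = []  # indices of rows found inside <thead>
        self._in_thead = False
        self._row: Optional[List[str]] = None
        self._cell: Optional[List[str]] = None

    def handle_starttag(self, tag, attrs):
        if tag == "thead":
            self._in_thead = True
        elif tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "thead":
            self._in_thead = False
        elif tag in ("td", "th") and self._row is not None and self._cell is not None:
            # Collapse whitespace so " Bob\n " becomes "Bob".
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._in_thead:
                self.thead_rows.append(len(self.rows))
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def parse_table(html: str) -> List[Dict[str, str]]:
    """Return one dict per body row, keyed by the column headers."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    # Prefer a real <thead>; otherwise fall back to treating the first row as headers.
    header_idx = parser.thead_rows[-1] if parser.thead_rows else 0
    skip = set(parser.thead_rows) | {header_idx}
    header = parser.rows[header_idx]
    return [dict(zip(header, row)) for i, row in enumerate(parser.rows) if i not in skip]
```

Because `zip` stops at the shorter sequence, a row with fewer cells than headers simply yields a smaller dict rather than raising.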

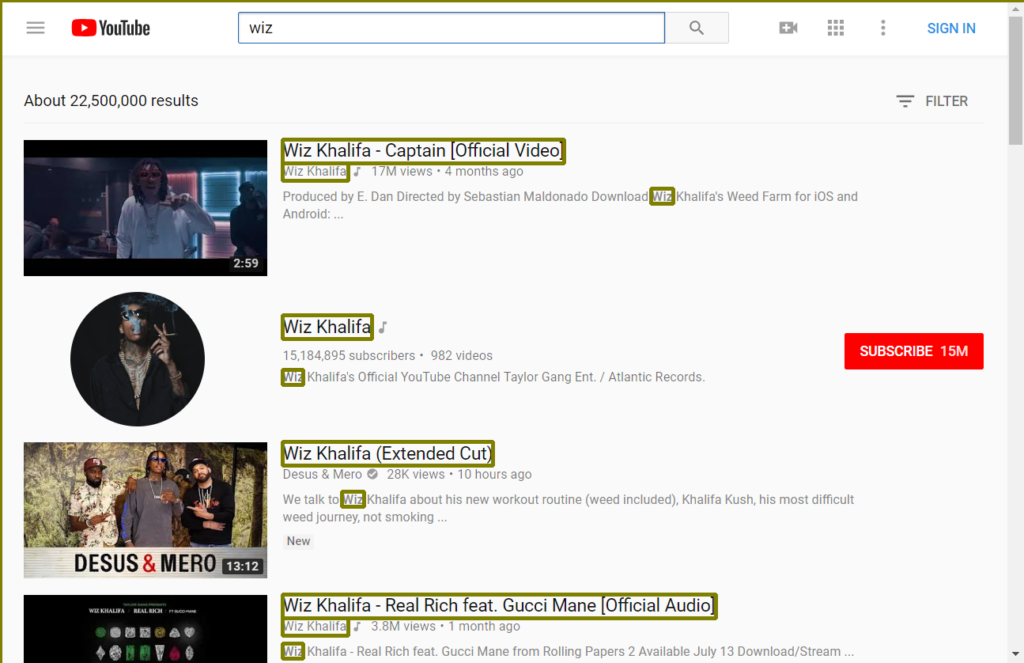

In this tutorial, I’m going to show how we can turn web scraping into an image-based problem instead of a purely DOM-parsing exercise.

Setup

We’re going to use Selenium to control our web browser and take screenshots.

You’ll need:

- Python 3 (I recommend the conda distribution)

- OpenCV

- Selenium

- PIL (installed today as its maintained fork, Pillow)

- Chrome

- ChromeDriver (it must match your installed version of Chrome, so it’s best to keep a separate Chrome install that doesn’t auto-update)
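Once those are installed, grabbing a screenshot and cropping out a single element might look something like this. It is a sketch that assumes Selenium 4 (for the `Service` class and `By` locators) and Pillow; `screenshot_element`, `rect_to_box`, the window size and the `--headless=new` flag are my assumptions, not anything prescribed by this tutorial. WebDriver reports element positions in CSS pixels, so the helper scales by `window.devicePixelRatio` before cropping.

```python
import io
from typing import Dict, Optional, Tuple


def rect_to_box(rect: Dict[str, float], device_pixel_ratio: float = 1.0) -> Tuple[int, int, int, int]:
    """Convert a WebDriver element rect (CSS pixels) to a PIL crop box (screenshot pixels)."""
    left = round(rect["x"] * device_pixel_ratio)
    top = round(rect["y"] * device_pixel_ratio)
    right = round((rect["x"] + rect["width"]) * device_pixel_ratio)
    bottom = round((rect["y"] + rect["height"]) * device_pixel_ratio)
    return left, top, right, bottom


def screenshot_element(url: str, css_selector: str, out_path: str,
                       driver_path: Optional[str] = None) -> None:
    """Load `url` in headless Chrome, screenshot the viewport and save one element's crop."""
    # Imported here so rect_to_box() can be used without Selenium or Pillow installed.
    from PIL import Image
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # use "--headless" on older Chrome versions
    options.add_argument("--window-size=1280,2000")
    # driver_path should point at the chromedriver matching your Chrome;
    # None lets Selenium look for one itself (PATH, or Selenium Manager in 4.6+).
    service = Service(executable_path=driver_path)
    driver = webdriver.Chrome(service=service, options=options)
    try:
        driver.get(url)
        element = driver.find_element(By.CSS_SELECTOR, css_selector)
        dpr = driver.execute_script("return window.devicePixelRatio")
        page = Image.open(io.BytesIO(driver.get_screenshot_as_png()))
        # Assumes the element sits inside the visible viewport (no scrolling needed).
        page.crop(rect_to_box(element.rect, dpr)).save(out_path)
    finally:
        driver.quit()
```

For example, `screenshot_element("https://example.com", "table", "table.png", driver_path="/path/to/chromedriver")` (placeholder URL and path) would save just the first matching table as an image.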

Go to the Jupyter notebook.

You can also generate videos from the screenshots using ffmpeg and OpenCV.
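A minimal sketch of the OpenCV side, assuming your screenshots are saved as numbered PNGs: `frames_to_video` and `natural_key` are hypothetical helpers, and the `mp4v` codec and 2 fps default are assumptions you may need to change for your OpenCV build. The natural sort matters because a plain string sort puts `frame_10.png` before `frame_2.png`.

```python
import re
from typing import List, Union


def natural_key(path: str) -> List[Union[int, str]]:
    """Sort key that orders frame_2.png before frame_10.png."""
    return [int(tok) if tok.isdigit() else tok for tok in re.split(r"(\d+)", path)]


def frames_to_video(frame_paths: List[str], out_path: str, fps: float = 2.0) -> None:
    """Write the screenshots, in natural order, to a video with OpenCV's VideoWriter."""
    import cv2  # imported lazily so natural_key() works without OpenCV installed

    paths = sorted(frame_paths, key=natural_key)
    if not paths:
        raise ValueError("no frames given")
    first = cv2.imread(paths[0])
    if first is None:
        raise FileNotFoundError(paths[0])
    height, width = first.shape[:2]
    writer = cv2.VideoWriter(out_path, cv2.VideoWriter_fourcc(*"mp4v"), fps, (width, height))
    try:
        for path in paths:
            frame = cv2.imread(path)
            if frame is None:
                raise FileNotFoundError(path)
            # VideoWriter needs every frame at the same size, so resize any odd one out.
            if frame.shape[:2] != (height, width):
                frame = cv2.resize(frame, (width, height))
            writer.write(frame)
    finally:
        writer.release()
```

Usage would be along the lines of `frames_to_video(glob.glob("shots/*.png"), "scrape.mp4")`, where the `shots/` folder is a placeholder.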

How You Can Use This

Say you’re designing a computer vision system to extract information from the real world, with the goal of replicating it digitally. Now you can use HTML to generate both the training images and their expected outputs!
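As a sketch of the labelling half, each element’s `rect` from Selenium can be turned into a bounding-box label for the matching screenshot. This example writes the YOLO text format (`class x_center y_center width height`, normalised to the image size) purely as an assumed target format; `to_yolo_line`, `label_elements` and the tag-to-class mapping are hypothetical.

```python
from typing import Dict, List, Tuple


def to_yolo_line(class_id: int, rect: Dict[str, float], image_w: float, image_h: float) -> str:
    """Format one box as a YOLO label line, with all coordinates normalised to 0-1.

    `rect` and the image size must be in the same units (e.g. both CSS pixels).
    """
    x_c = (rect["x"] + rect["width"] / 2) / image_w
    y_c = (rect["y"] + rect["height"] / 2) / image_h
    w = rect["width"] / image_w
    h = rect["height"] / image_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def label_elements(elements: List[Tuple[str, Dict[str, float]]], classes: Dict[str, int],
                   image_w: float, image_h: float) -> List[str]:
    """Turn (tag_name, rect) pairs into label lines, one per element we care about."""
    lines = []
    for tag, rect in elements:
        if tag not in classes or rect["width"] <= 0 or rect["height"] <= 0:
            continue  # skip tags we don't label and hidden/zero-size elements
        lines.append(to_yolo_line(classes[tag], rect, image_w, image_h))
    return lines
```

With a live driver, the input could be `[(el.tag_name, el.rect) for el in driver.find_elements(By.CSS_SELECTOR, "table, img")]`, paired with a screenshot of the same viewport.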

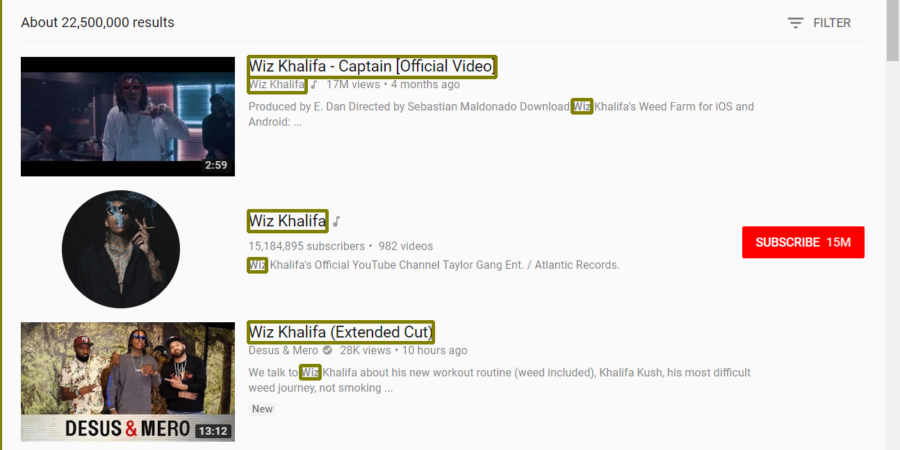

Or you want to build a scraper for sites that obscure their DOM and change frequently.
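When the DOM is obfuscated but the page still draws a bordered table, one classic image-only trick is a projection profile: rows and columns of pixels that are mostly dark are likely grid lines, and the gaps between them are cells. This sketch works in pure Python on a 0/1 pixel grid for clarity rather than speed; `find_cells`, `line_positions` and the 0.8 threshold are assumptions, and borderless tables would need a different technique.

```python
from typing import List, Tuple

Grid = List[List[int]]  # 2D image: 1 = dark pixel, 0 = light pixel


def line_positions(profile: List[float], min_fraction: float) -> List[int]:
    """Return the centre index of each run where the dark fraction >= min_fraction (> 0)."""
    lines, start = [], None
    for i, v in enumerate(profile + [0.0]):  # trailing sentinel closes a final run
        if v >= min_fraction and start is None:
            start = i
        elif v < min_fraction and start is not None:
            lines.append((start + i - 1) // 2)
            start = None
    return lines


def find_cells(img: Grid, min_fraction: float = 0.8) -> List[Tuple[int, int, int, int]]:
    """Locate table cells between ruling lines.

    Returns (left, top, right, bottom) boxes, right/bottom exclusive as in PIL's crop.
    """
    h, w = len(img), len(img[0])
    row_profile = [sum(row) / w for row in img]
    col_profile = [sum(img[y][x] for y in range(h)) / h for x in range(w)]
    ys = line_positions(row_profile, min_fraction)
    xs = line_positions(col_profile, min_fraction)
    return [(x0 + 1, y0 + 1, x1, y1)
            for y0, y1 in zip(ys, ys[1:])
            for x0, x1 in zip(xs, xs[1:])]
```

With OpenCV, such a grid could come from `cv2.threshold(gray, 128, 1, cv2.THRESH_BINARY_INV)[1].tolist()` on a grayscale screenshot, and each returned box can then be cropped out with PIL for further processing.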

Or you want to build a more intelligent web scraper.